Introduction

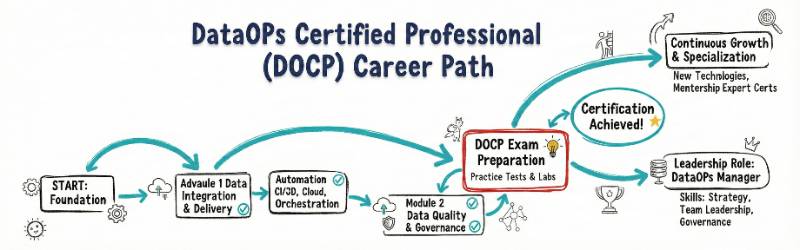

Data is the most valuable asset any company owns, but it is also the most difficult to manage correctly. For decades, I have seen brilliant data projects fail because the “plumbing” was broken: data arrived late, was full of errors, or sat in silos where nobody could use it. This gap between having data and actually getting value from it is why DataOps exists.

DataOps is more than just a buzzword. It is a fundamental change in how we handle data, combining the speed of Agile, the automation of DevOps, and the precision of Lean manufacturing. If you are a software engineer or a manager looking to stay relevant in an era dominated by Big Data and AI, the DataOps Certified Professional (DOCP) is one of the most important credentials you can earn.

This guide takes an expanded, expert look at the DOCP program, how to prepare for it, and how it fits into your long-term career.

The Evolution of Data Management

In the early days of my career, data management was a “waterfall” process. You would spend months designing a schema, more months building an ETL (Extract, Transform, Load) process, and then hope that the data was correct by the time it reached the business user. If something broke, it stayed broken for days.

Today, that model is dead. Companies need real-time insights, and they need to trust their data as much as they trust their software code. DataOps treats data pipelines as a living, breathing product. It introduces Data-as-Code, where every transformation and every data movement is automated, versioned, and tested. The DOCP certification proves that you understand this modern flow and can build the systems that support it.
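To make Data-as-Code concrete, here is a minimal sketch, assuming a hypothetical `orders` feed delivered as a list of dictionaries. The transformation is an ordinary function kept in Git, and the test beside it is what a CI job would run on every commit. The function and field names are invented for illustration and are not taken from the DOCP curriculum.

```python
from decimal import Decimal


def clean_orders(rows: list[dict]) -> list[dict]:
    """Hypothetical transformation: drop rows without an order_id,
    trim customer names, and parse amounts as exact decimals."""
    cleaned = []
    for row in rows:
        if not row.get("order_id"):
            continue  # a record without a key cannot be loaded safely
        cleaned.append(
            {
                "order_id": row["order_id"],
                "customer": row["customer"].strip(),
                "amount": Decimal(row["amount"]),
            }
        )
    return cleaned


def test_clean_orders() -> None:
    """Lives next to the transformation in Git; CI runs it on every commit."""
    raw = [
        {"order_id": "A1", "customer": "  Asha ", "amount": "19.99"},
        {"order_id": "", "customer": "Unknown", "amount": "0"},
    ]
    result = clean_orders(raw)
    assert len(result) == 1
    assert result[0]["customer"] == "Asha"
    assert result[0]["amount"] == Decimal("19.99")


if __name__ == "__main__":
    test_clean_orders()
    print("all transformation tests passed")
```

In a real repository the test would usually sit in its own file and be picked up by a test runner such as pytest, so a broken transformation fails the build instead of reaching production.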

Deep Dive: DataOps Certified Professional (DOCP)

The DOCP is an industry-recognized program designed by DevOpsSchool. It is built for those who want to master the end-to-end lifecycle of data.

What it is

The DataOps Certified Professional (DOCP) is a technical certification that validates your expertise in automating data workflows. It focuses on breaking down the barriers between data producers (engineers) and data consumers (analysts/scientists). You will learn to use tools like Apache Airflow, Kafka, dbt, and Great Expectations to create resilient, self-healing data pipelines that deliver high-quality data at high speed.

Who should take it

This program is specifically designed for:

- Software Engineers: Who want to specialize in the data infrastructure side of development.

- Data Engineers: Who are tired of manual pipeline fixes and want to automate their environment.

- Engineering Managers: Who need to understand the modern data stack to lead their teams effectively.

- SREs and DevOps Engineers: Who are moving into “Data Platform” roles.

- Data Architects: Who need to design scalable, secure, and compliant data ecosystems.

Skills you’ll gain

- Orchestration Mastery: Learn to schedule and manage complex data workflows across different cloud providers.

- CI/CD for Data: Apply software engineering best practices (Git, automated testing) to data pipelines.

- Data Quality Automation: Implement “Circuit Breakers” in your pipelines that stop bad data before it hits production.

- Infrastructure as Code (IaC): Use Terraform and Docker to provision data environments in minutes, not weeks.

- Observability & Monitoring: Build dashboards that track data “health” and alert you to anomalies before the business notices.

- Data Governance & Security: Learn how to manage data lineage and access control in a way that satisfies legal and compliance requirements.
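The “Circuit Breaker” skill from the list above can be sketched in a few lines. This is a minimal standard-library illustration under assumed rules (a batch must be non-empty, and at most 5% of rows may be missing an `email` value); `check_batch`, `load_to_warehouse`, and `DataQualityError` are hypothetical names. Tools such as Great Expectations let you declare checks like these instead of hand-coding them, but this sketch does not use their API.

```python
class DataQualityError(Exception):
    """Raised when a batch fails a quality check; stops the pipeline."""


def check_batch(rows: list[dict], max_null_ratio: float = 0.05) -> None:
    """Hypothetical circuit breaker: reject an empty batch, or one where
    too many rows are missing an 'email' value."""
    if not rows:
        raise DataQualityError("empty batch")
    nulls = sum(1 for row in rows if not row.get("email"))
    ratio = nulls / len(rows)
    if ratio > max_null_ratio:
        raise DataQualityError(
            f"null email ratio {ratio:.0%} exceeds limit of {max_null_ratio:.0%}"
        )


def load_to_warehouse(rows: list[dict], warehouse: list[dict]) -> None:
    """Stand-in for a real load step: the check runs before any write."""
    check_batch(rows)  # the breaker trips here, so bad data never lands
    warehouse.extend(rows)


if __name__ == "__main__":
    warehouse: list[dict] = []
    load_to_warehouse([{"email": "a@example.com"}, {"email": "b@example.com"}], warehouse)
    try:
        load_to_warehouse([{"email": ""}, {"email": "c@example.com"}], warehouse)
    except DataQualityError as err:
        print("blocked:", err)  # blocked: null email ratio 50% exceeds limit of 5%
    print(len(warehouse))  # 2
```

The key design choice is ordering: the check runs before the write, so a failing batch leaves the warehouse untouched rather than needing a cleanup afterwards.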

Real-world projects you should be able to do

- End-to-End Automated ETL Pipeline: Build a system that extracts data from a source, cleans it, tests it for quality, and loads it into a warehouse—all without human intervention.

- Self-Healing Data Infrastructure: Design a system that automatically restarts failed tasks and alerts the team with a full root-cause analysis.

- Real-Time Data Streaming Hub: Use Kafka to process millions of events per second and feed them into real-time analytics dashboards.

- Multi-Cloud Data Synchronization: Create a workflow that keeps data consistent across AWS and Azure environments.
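The self-healing project above boils down to retrying failed tasks and escalating only when retries run out. Here is a minimal sketch, assuming exponential backoff and a caller-supplied `alert` function (for example, one that posts to a chat channel). `run_with_retries` is a hypothetical helper; orchestrators such as Airflow offer comparable task-level retry settings, and a real root-cause report would carry far more context than the single exception forwarded here.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    alert: Callable[[str], None],
    max_attempts: int = 3,
    base_delay: float = 1.0,
) -> T:
    """Run `task`, retrying with exponential backoff (base_delay, 2x, 4x, ...).
    If every attempt fails, send one alert with the last error and re-raise."""
    last_error: Optional[Exception] = None
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as err:  # any failure triggers a retry in this sketch
            last_error = err
            if attempt < max_attempts:
                time.sleep(base_delay * 2 ** (attempt - 1))
    alert(f"task failed after {max_attempts} attempts: {last_error!r}")
    raise RuntimeError("task exhausted its retries") from last_error


if __name__ == "__main__":
    calls = {"count": 0}

    def flaky_extract() -> str:
        # Simulated source that is unavailable for the first two calls.
        calls["count"] += 1
        if calls["count"] < 3:
            raise ConnectionError("source unavailable")
        return "extracted 1,000 rows"

    print(run_with_retries(flaky_extract, alert=print, base_delay=0.1))  # extracted 1,000 rows
```

Backoff gives a briefly unavailable source time to recover, and the team is paged only once, after the automatic fixes have genuinely failed.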

Preparation Plan

| Timeline | Focus Area | Study Plan |
|---|---|---|
| 7–14 Days | The Fast Track | Best for experts. Focus on tool-specific syntax (Airflow/dbt) and the DOCP exam format. Spend 4–5 hours daily on labs. |
| 30 Days | The Standard Path | Recommended for working professionals. Spend 2 hours daily: Weeks 1–2 on concepts, Weeks 3–4 on hands-on project labs. |
| 60 Days | The Deep Learner | Ideal for those new to data. Spend Month 1 on Linux, SQL, and Git basics, and Month 2 on the core DOCP modules and three full-scale projects. |

Common Mistakes to Avoid

- Focusing Only on Tools: Many people learn how to use Airflow but forget the “Ops” part—like how to monitor it or how to handle security.

- Ignoring Data Quality: Speed is useless if the data is wrong. Always automate your testing.

- Skipping Linux/Git Basics: You cannot be a DataOps professional if you aren’t comfortable with the command line and version control.

- Treating Data Like Static Files: Data changes constantly (Data Drift). Your pipelines must be designed to handle these changes automatically.
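Handling drift automatically starts with detecting it. The sketch below assumes a hypothetical three-column contract for an orders feed; it compares each incoming record against the expected schema and reports missing columns, new columns, and type changes. The tolerance policy in `has_breaking_drift` (new columns are allowed, missing or retyped ones are not) is an assumption you would tune for your own pipelines.

```python
# Hypothetical data contract: column name -> accepted Python type(s).
EXPECTED_SCHEMA = {
    "order_id": str,
    "amount": (int, float),  # accept whole-number amounts as well as floats
    "country": str,
}


def detect_schema_drift(record: dict, expected: dict = EXPECTED_SCHEMA) -> dict:
    """Compare one record to the contract and list what changed."""
    missing = sorted(set(expected) - set(record))
    added = sorted(set(record) - set(expected))
    type_changes = sorted(
        col
        for col in set(expected) & set(record)
        if not isinstance(record[col], expected[col])
    )
    return {"missing": missing, "added": added, "type_changes": type_changes}


def has_breaking_drift(report: dict) -> bool:
    """Policy assumption: new columns are tolerated; missing or retyped ones are not."""
    return bool(report["missing"] or report["type_changes"])


if __name__ == "__main__":
    incoming = {"order_id": "A1", "amount": "19.99", "channel": "web"}
    report = detect_schema_drift(incoming)
    print(report)
    # {'missing': ['country'], 'added': ['channel'], 'type_changes': ['amount']}
    print(has_breaking_drift(report))  # True
```

A pipeline can run this on each batch and route records with breaking drift to a quarantine area instead of failing the whole load.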

Best Next Certification After This

After earning your DOCP, the logical next step is AIOps Certified Professional (AIOCP). Once your data is flowing correctly, the next challenge is using AI to manage your entire IT infrastructure.

Master Certification Table

| Track | Level | Who it’s for | Prerequisites | Skills Covered | Recommended Order |
|---|---|---|---|---|---|
| DataOps | Professional | Data/Software Engineers | Basic IT/Data | Automation, CI/CD, Quality | 1st |
| DevOps | Professional | General Engineers | Programming basics | Jenkins, Docker, K8s | 1st |
| DevSecOps | Specialist | Security Engineers | DevOps Basics | Vault, SAST, DAST | 2nd |
| SRE | Professional | Ops/Reliability Leads | Linux/Scripting | SLOs, Error Budgets | 2nd |
| MLOps | Advanced | ML/AI Engineers | DataOps Basics | Model Tracking, Drift | 2nd |
| FinOps | Specialist | Managers/Finance | Cloud Basics | Cost Optimization | 3rd |

Choose Your Path: 6 Learning Journeys

- The DevOps Path: The entry point for everyone. It focuses on the general delivery of software. If you like automation and high-speed releases, start here.

- The DevSecOps Path: For the security-conscious. You learn how to make security an automated part of the pipeline so it never slows down the team.

- The SRE Path: Focused on reliability and scale. This is for the engineer who wants to ensure that massive systems stay online 99.99% of the time.

- The AIOps/MLOps Path: The “Intelligence” track. You learn to manage AI models and use AI to predict and fix system failures before they happen.

- The DataOps Path: The “Data Flow” track. Perfect for those who want to lead the data revolution by building the pipelines that feed the world’s AI.

- The FinOps Path: The “Business” track. It teaches you how to manage the massive costs associated with the cloud and big data, making you a hero in the eyes of the finance department.

Role → Recommended Certifications

| Current Role | Target Certification Path |
|---|---|
| DevOps Engineer | DevOps Professional → SRE Professional |
| SRE | SRE Professional → AIOps Certified Professional |
| Platform Engineer | DevOps Professional → DataOps Professional |
| Cloud Engineer | DevOps Professional → FinOps Practitioner |
| Security Engineer | DevSecOps Specialist → SRE Professional |
| Data Engineer | DataOps Professional (DOCP) → MLOps Specialist |
| FinOps Practitioner | FinOps Professional → DevOps Manager |
| Engineering Manager | DevOps Manager → FinOps for Managers |

Training & Certification Support Institutions

Choosing where to learn is as important as what you learn. These institutions are the leaders in the DataOps space:

- DevOpsSchool: This is the primary home for the DOCP program. They are world-renowned for their “Industrial Training” style, which means you work on projects that actually look like real company tasks. Their mentors have decades of experience, and their curriculum is updated constantly to match what companies are actually hiring for today.

- Cotocus: A specialist in corporate-level training. They excel at taking large teams and upskilling them in DataOps and Cloud technologies. If you are looking for a boutique experience with a high level of personal attention and hands-on lab support, Cotocus is an excellent choice.

- Scmgalaxy: This is one of the largest community hubs for DevOps and DataOps in the world. They provide an incredible amount of free resources, blogs, and tutorials that complement the DOCP certification. It is the perfect place to stay updated on the latest tool releases and community best practices.

- BestDevOps: They live up to their name by focusing strictly on “Best Practices.” Their training doesn’t just show you how to use a tool; it shows you the most efficient, secure, and cost-effective way to use it. They are highly recommended for engineers who want to reach a “Senior” or “Lead” level.

- DevSecOpsSchool: Since data is sensitive, security is a major part of DataOps. This institution provides the best cross-training for DOCP students who want to ensure their data pipelines are fully encrypted and compliant with global laws like GDPR.

- SRESchool: Reliability is the core of their teaching. They help DOCP students understand how to apply “Site Reliability” principles to data pipelines, ensuring that your data systems are just as stable as your primary web applications.

- AIOpsSchool: As you finish your DataOps journey, AIOpsSchool provides the next step. They specialize in teaching how to use machine learning to automate the management of your data pipelines and cloud infrastructure.

- DataOpsSchool: A dedicated platform for everything related to data flows. They offer deep-dive courses that go into the “nitty-gritty” of data versioning, metadata management, and warehouse orchestration.

- FinOpsSchool: Every data pipeline costs money. FinOpsSchool teaches DOCP professionals how to monitor the cloud costs of their data processing, ensuring that the systems they build are not only fast but also profitable.

Next Certifications to Take

Once you have your DOCP, you have three powerful directions you can go:

- Same Track (Advanced Expertise): Pursue MLOps Certified Professional. Since you already know how to move data, learning how to manage the AI models that use that data makes you incredibly valuable.

- Cross-Track (Broad Skillset): Pursue SRE Certified Professional. This gives you the skills to ensure your data pipelines never go down, which is critical for real-time business applications.

- Leadership (Career Growth): Pursue DevOps Manager. This helps you transition from a technical lead to a management role where you oversee the entire delivery and data strategy of a company.

FAQs (Frequently Asked Questions)

1. Is the DataOps Certified Professional (DOCP) very difficult?

It is a professional-level exam. If you are a complete beginner, it will be a challenge. However, if you follow the 30-day or 60-day plan and complete the hands-on labs, you will be well-prepared. It tests practical knowledge, not just memorizing terms.

2. How much time do I need to study every week?

Most working professionals spend about 8–10 hours a week. This includes watching lessons and at least 4 hours of “keyboard time” in the labs.

3. What are the prerequisites?

There are no formal prerequisites to sit for the exam, but I strongly recommend you have a basic understanding of SQL and the Linux command line. Knowing a bit of Python is a huge plus.

4. Should I take DevOps before DataOps?

If you have zero experience with automation, start with a general DevOps course. If you already work with data (as a Data Engineer or Analyst), you can jump straight into the DOCP.

5. What is the value of this certification in the market?

Companies are currently desperate for people who can manage “Data Debt.” Having “DOCP” on your profile tells employers you can build systems that reduce costs and increase speed. It often leads to a significant increase in salary and job offers.

6. Does it cover multiple cloud providers?

Yes. The principles you learn apply to AWS, Azure, and Google Cloud. The tools taught, like Airflow and Terraform, are cloud-agnostic.

7. How long is the certification valid?

The certification is valid for life, but we recommend taking a “Refresher” module every two years to stay updated on the latest tools in the modern data stack.

8. Can a manager benefit from this technical certification?

Yes. Managers who don’t understand the DataOps lifecycle often make the mistake of setting impossible deadlines or buying the wrong tools. This program gives you the “Expert Language” you need to lead your team.

9. Is the exam multiple-choice or lab-based?

It is a combination. You will have to answer conceptual questions and also prove that you can complete specific tasks in a live lab environment.

10. Why choose DOCP over a generic “Big Data” course?

Most Big Data courses teach you how to analyze data. DOCP teaches you how to build the factory that delivers the data. Analyzing data is easy; delivering it reliably is the hard part.

11. Is there a specific sequence I should follow for “Ops” certifications?

The best sequence is: DevOps (Foundation) → DataOps (Specialization) → MLOps or SRE (Advanced).

12. Will this help me in a career transition?

Absolutely. Many QA engineers and manual DBAs use the DOCP as a bridge to move into high-paying “Platform Engineering” or “DataOps Engineering” roles.


More FAQs: Career Strategy and Emerging Tech

1. How does the DOCP certification specifically impact my salary?

Based on recent industry trends, professionals who move from traditional Data Engineering to a certified DataOps role often see a salary increase of 20% to 35%. In India and global markets, companies are willing to pay a premium for “Ops” expertise because it directly reduces the high costs associated with manual pipeline failures and cloud waste.

2. I already know DevOps; is there enough “new” content in DOCP to justify the effort?

Yes. While the philosophy is similar, the implementation is entirely different. In software DevOps, you manage code. In DataOps, you manage “Data State” and “Data Drift.” DOCP teaches you specialized skills like Data Lineage, Semantic Layers, and Automated Data Quality Testing—concepts that traditional DevOps does not cover.
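Data Lineage is easier to grasp with an example. In this minimal sketch, lineage is assumed to be stored as a simple map from each dataset to the datasets it is built from (all dataset names are hypothetical). Walking the map upstream answers “where did this number come from?”, and walking it in reverse answers “what breaks if this source fails?”. Dedicated lineage tools collect this metadata automatically, but the underlying graph traversal is similar.

```python
from collections import deque

# Hypothetical lineage metadata: dataset -> datasets it is derived from.
LINEAGE: dict[str, list[str]] = {
    "raw_orders": [],
    "raw_customers": [],
    "stg_orders": ["raw_orders"],
    "stg_customers": ["raw_customers"],
    "fct_revenue": ["stg_orders", "stg_customers"],
    "revenue_dashboard": ["fct_revenue"],
}


def upstream(dataset: str, lineage: dict[str, list[str]] = LINEAGE) -> set[str]:
    """Breadth-first walk returning every dataset that feeds `dataset`."""
    seen: set[str] = set()
    queue = deque(lineage.get(dataset, []))
    while queue:
        parent = queue.popleft()
        if parent not in seen:
            seen.add(parent)
            queue.extend(lineage.get(parent, []))
    return seen


def downstream(dataset: str, lineage: dict[str, list[str]] = LINEAGE) -> set[str]:
    """Reverse the edges, then reuse the same walk to find impacted datasets."""
    children: dict[str, list[str]] = {}
    for child, parents in lineage.items():
        for parent in parents:
            children.setdefault(parent, []).append(child)
    return upstream(dataset, children)


if __name__ == "__main__":
    print(sorted(upstream("revenue_dashboard")))
    # ['fct_revenue', 'raw_customers', 'raw_orders', 'stg_customers', 'stg_orders']
    print(sorted(downstream("raw_orders")))
    # ['fct_revenue', 'revenue_dashboard', 'stg_orders']
```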

3. Will this certification help me work with AI and Large Language Models (LLMs)?

Absolutely. One of the biggest bottlenecks in AI today is “Garbage In, Garbage Out.” DOCP teaches you how to build the high-quality, automated pipelines (RAG-ready) that are required to feed modern AI models. Without DataOps, AI projects rarely make it to production.

4. How does DOCP compare to a vendor-specific certification (like AWS Data Engineer)?

Vendor certifications (AWS/Azure/GCP) teach you how to use their specific tools. DOCP teaches you the framework and strategy that works across any cloud. Most senior roles require you to be “Cloud-Agnostic,” and DOCP provides that high-level architectural authority.

5. What is the “Hands-On” component of the exam like?

The exam is not just multiple-choice. You are expected to perform tasks in a Live Lab Environment. This might include fixing a broken DAG (Directed Acyclic Graph) in Airflow, setting up a data validation rule using Great Expectations, or configuring a CI/CD pipeline to deploy a data transformation.
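A DAG is usually “broken” because a dependency is wrong, and the worst case is a cycle, which leaves no valid order in which to run the tasks. The sketch below is not Airflow code. It uses Python's standard `graphlib` module (Python 3.9+) to illustrate the rule an orchestrator enforces, and the four-task pipeline is a hypothetical example.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return a valid run order. `dependencies` maps each task to the set
    of tasks that must finish before it can start."""
    return list(TopologicalSorter(dependencies).static_order())


pipeline = {
    "extract": set(),
    "validate": {"extract"},
    "transform": {"validate"},
    "load": {"transform"},
}
print(execution_order(pipeline))  # ['extract', 'validate', 'transform', 'load']

# A bad edit makes "extract" wait on "load", which closes a loop.
broken = dict(pipeline, extract={"load"})
try:
    execution_order(broken)
except CycleError as err:
    print("not a DAG, cycle found:", err.args[1])
```

Fixing a broken DAG in a lab usually means finding the bad edge that `CycleError` points to and removing or reversing it.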

6. Can I take this certification if my background is in Manual Testing or DBA?

Yes, and it is actually one of the best “exit strategies” for those roles. Many manual testers transition into Data Quality Automation, and DBAs transition into Database-as-Code experts through this program. It bridges the gap between old-school data management and modern engineering.
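For DBAs, “Database-as-Code” usually means every schema change is a versioned migration script kept in Git and applied automatically, which is the approach tools such as Flyway and Liquibase take. Here is a minimal sketch using Python's built-in `sqlite3`; the `MIGRATIONS` list and the `schema_version` tracking table are hypothetical names chosen for illustration.

```python
import sqlite3

# Hypothetical migrations, stored in version control and applied in order.
MIGRATIONS = [
    (1, "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"),
    (2, "ALTER TABLE customers ADD COLUMN email TEXT"),
    (3, "CREATE INDEX idx_customers_email ON customers (email)"),
]


def migrate(conn: sqlite3.Connection) -> list[int]:
    """Apply every migration newer than the recorded version and return
    the versions applied in this run (empty when already up to date)."""
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER NOT NULL)")
    current = conn.execute("SELECT MAX(version) FROM schema_version").fetchone()[0] or 0
    applied = []
    for version, sql in MIGRATIONS:
        if version > current:
            with conn:  # commit once the change and its version record are written
                conn.execute(sql)
                conn.execute("INSERT INTO schema_version (version) VALUES (?)", (version,))
            applied.append(version)
    return applied


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(migrate(conn))  # [1, 2, 3]
    print(migrate(conn))  # [] because re-running is safe and does nothing
    print([col[1] for col in conn.execute("PRAGMA table_info(customers)")])
    # ['id', 'name', 'email']
```

Because the current version is recorded in the database itself, the same script can run in every environment and only ever applies what is missing.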

7. How does DataOps handle the “Human Element” differently than DevOps?

DataOps focuses heavily on the collaboration between Data Scientists (who build models) and Engineers (who build systems). The DOCP curriculum includes “People and Process” modules that show you how to break down the specific silos that often exist in data-heavy organizations.

8. Is the DOCP recognized by major global enterprises?

Yes. DevOpsSchool’s certification is recognized and trusted by leading firms including Nokia, Verizon, Barclays, and World Bank. Having a globally verifiable ID with your certificate ensures that your credentials can be authenticated by HR departments anywhere in the world.

Testimonials

Siddharth Mehta, Data Architect

“Before taking the DOCP, my team was drowning in manual data fixes. The training at DevOpsSchool changed our entire culture. We moved from weekly releases to multiple daily releases of our data models. This certification is the real deal.”

Ananya Verma, Software Engineer

“I wanted to move into the data space but didn’t know where to start. The DOCP provided a clear roadmap. The projects were challenging, but they gave me the confidence to handle a real production environment. I got a job offer within a month of finishing!”

Robert Hayes, Engineering Manager

“As a manager, I needed to understand why our data projects were always late. This program taught me the bottlenecks in our process. I didn’t just learn tools; I learned a new way to manage people and technology together. Highly recommended for any leader.”

Conclusion

The era of “slow data” is over. Whether you are in India or working for a global firm, the ability to automate and secure the flow of data is the most valuable skill you can possess. The DataOps Certified Professional (DOCP) isn’t just a piece of paper; it is a sign that you have the skills to lead your company into the future. Don’t let your skills become outdated. Start your journey today, choose your path, and become an expert in the most critical discipline of the modern era. The tools and the strategy are ready for you—now it’s time to take the first step.