Introduction

Artificial Intelligence (AI) has advanced rapidly, with machine learning (ML) models at the core of breakthroughs in fields such as healthcare, finance, and robotics. However, as data grows in volume and complexity, some computational tasks, such as simulating quantum systems or solving certain large optimization problems, become prohibitively expensive on classical hardware. Quantum computing, which exploits the principles of quantum mechanics, is emerging as a technology that could transform parts of machine learning. This comprehensive tutorial explores how quantum computing may shape the future of AI, providing both foundational knowledge and practical guidance for practitioners and enthusiasts.

1. Foundations of Quantum Computing and Machine Learning

What is Quantum Computing?

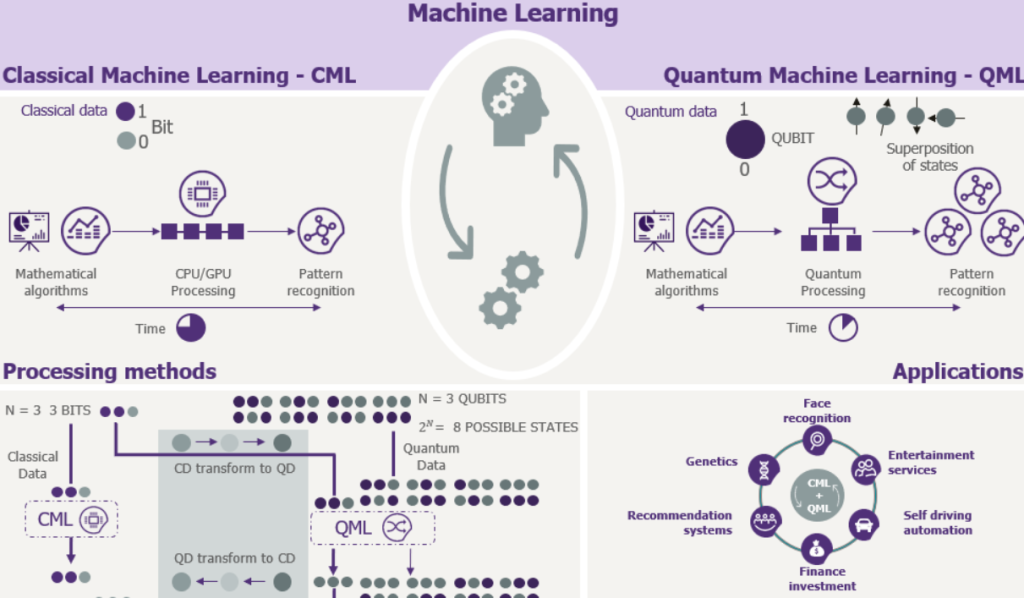

Quantum computing uses quantum bits (qubits). Unlike a classical bit, a qubit can be in a superposition of 0 and 1, and groups of qubits can be entangled so that their joint state cannot be described one qubit at a time. Quantum algorithms exploit these effects to solve certain problems in fewer steps than the best known classical algorithms.

Key Quantum Principles:

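- Superposition: A qubit's state is a weighted combination of 0 and 1. Measuring it yields 0 or 1 with probabilities set by those weights (amplitudes). A register of n qubits is described by 2^n amplitudes, which is the source of the often-cited "parallelism", although a single measurement reveals only one outcome.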

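- Entanglement: Qubits can be correlated so that measurement outcomes on one are linked to outcomes on another, regardless of distance. These correlations are stronger than any classical system allows, but they cannot be used to send information instantaneously.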

- Quantum Interference: Amplitudes can reinforce or cancel each other. Quantum algorithms are designed so that the amplitudes of correct answers are amplified while those of incorrect answers cancel out.
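The three principles can be made concrete with a few lines of plain Python. The sketch below is a minimal statevector simulation written for illustration only: it does not use Qiskit, PennyLane, or any other SDK, and `apply_single`, `apply_cnot`, and `probabilities` are hypothetical helper names. It stores the complex amplitudes of one or two qubits and prints measurement probabilities after a Hadamard gate (superposition), after a second Hadamard (interference), and after a Hadamard followed by a CNOT (entanglement).

```python
import math

# Illustrative pure-Python statevector sketch (no quantum SDK; helper names are hypothetical).
# Qubit 0 is the most significant bit of the basis-state index.
S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]  # Hadamard gate


def apply_single(state, gate, target, n_qubits):
    """Apply a 2x2 gate to one qubit of an n-qubit state vector."""
    new = [0j] * len(state)
    shift = n_qubits - 1 - target
    for i, amp in enumerate(state):
        bit = (i >> shift) & 1
        for out_bit in (0, 1):
            j = (i & ~(1 << shift)) | (out_bit << shift)
            new[j] += gate[out_bit][bit] * amp
    return new


def apply_cnot(state, control, target, n_qubits):
    """Flip the target qubit on every basis state where the control qubit is 1."""
    new = list(state)
    c_shift = n_qubits - 1 - control
    t_shift = n_qubits - 1 - target
    for i, amp in enumerate(state):
        if (i >> c_shift) & 1:
            new[i ^ (1 << t_shift)] = amp
    return new


def probabilities(state):
    """Measurement probability of each basis state, rounded for display."""
    return [round(abs(a) ** 2, 3) for a in state]


# Superposition: H|0> measures as 0 or 1 with equal probability.
plus = apply_single([1, 0], H, 0, 1)
print(probabilities(plus))  # [0.5, 0.5]

# Interference: a second H makes the two paths to |1> cancel, returning to |0>.
back = apply_single(plus, H, 0, 1)
print(probabilities(back))  # [1.0, 0.0]

# Entanglement: H then CNOT yields the Bell state (|00> + |11>)/sqrt(2).
bell = apply_cnot(apply_single([1, 0, 0, 0], H, 0, 2), 0, 1, 2)
print(probabilities(bell))  # [0.5, 0.0, 0.0, 0.5]
```

In the Bell state the two qubits are always measured with the same value (00 or 11), even though each qubit on its own is equally likely to read 0 or 1.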

Machine Learning Recap:

Machine learning involves algorithms that learn from data to make predictions or decisions. Classical ML models, such as support vector machines, neural networks, and clustering algorithms, have driven much of AI’s progress, but training them on ever-larger datasets demands ever more classical compute.

2. Quantum Machine Learning (QML): Bridging Two Worlds

Quantum Machine Learning (QML) integrates quantum algorithms with ML models, aiming for faster, more efficient, and potentially more powerful learning systems. QML is not just about speed; it also opens new avenues for studying model expressivity and generalization.

Tutorial Structure and Key Topics:

Leading tutorials in the field, such as those by Yuxuan Du and colleagues, recommend a structured approach to QML.

- Classical Foundations: Understand classical ML algorithms before exploring their quantum counterparts.

- Quantum Model Construction: Learn how classical models (like neural networks and support vector machines) are adapted for quantum computers.

- Theoretical Analysis: Study the expressivity, trainability, and generalization of QML models.

- Practical Implementations: Get hands-on with code demonstrations and real-world datasets.

- Frontier Topics: Explore open challenges and future directions in QML.

3. Key Quantum Algorithms Enhancing Machine Learning

Quantum Support Vector Machines (QSVM):

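QSVMs replace the classical kernel of a support vector machine with a quantum kernel. Each data point is encoded into a quantum state by a feature map, and the kernel value for two points is the squared overlap of their states, estimated on a quantum device. Because the feature space grows exponentially with the number of qubits, some quantum kernels are believed to be hard to compute classically, which could enable better classification on suitable data. A general speed or accuracy advantage over classical SVMs has not been established.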
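A quantum kernel is simply a similarity measure computed from quantum states. The sketch below assumes a simple angle-encoding feature map (each feature x_i sets an RY(x_i) rotation on its own qubit; this is an illustrative choice, not the feature map of any particular paper or library) and computes k(x, z) = |⟨φ(x)|φ(z)⟩|² by simulating the state vectors in plain Python. Because this map uses no entangling gates, the kernel factorizes into a product of cos²((x_i - z_i)/2) terms and is easy to compute classically; feature maps proposed for quantum advantage typically add entangling layers to avoid exactly this.

```python
import math

# Illustrative quantum-kernel sketch. The angle-encoding feature map below is an
# assumption chosen for simplicity; it is not any specific library's feature map.


def ry_on_zero(theta):
    """Amplitudes of RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1> (real-valued)."""
    return [math.cos(theta / 2), math.sin(theta / 2)]


def feature_map(x):
    """Encode feature x_i as RY(x_i) on qubit i; the tensor product gives 2**len(x) amplitudes."""
    state = [1.0]
    for xi in x:
        qubit = ry_on_zero(xi)
        state = [a * b for a in state for b in qubit]
    return state


def quantum_kernel(x, z):
    """k(x, z) = |<phi(x)|phi(z)>|^2; amplitudes are real here, so no conjugation is needed."""
    overlap = sum(a * b for a, b in zip(feature_map(x), feature_map(z)))
    return overlap ** 2


# Made-up 2-feature points: two near the origin, two far from it.
X = [[0.1, 0.4], [0.2, 0.5], [2.5, 2.9], [2.7, 3.0]]
gram = [[quantum_kernel(a, b) for b in X] for a in X]
for row in gram:
    print([round(v, 3) for v in row])
```

The resulting Gram matrix can be handed to a classical SVM solver; for example, scikit-learn's `SVC(kernel="precomputed")` accepts a precomputed kernel matrix. On real hardware, each entry would instead be estimated from repeated measurements.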

Quantum Principal Component Analysis (QPCA):

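QPCA leverages quantum states to perform dimensionality reduction. If a dataset's covariance matrix can be loaded as a quantum state (a density matrix), quantum phase estimation can reveal its dominant eigenvalues and eigenvectors, which are the principal components. The promised exponential speedup depends on that loading step being efficient, which remains a major practical obstacle for large classical datasets.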
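QPCA does not change what principal component analysis computes; it changes how. The classical sketch below computes the same target quantities so the idea is concrete: it centers a small made-up 2-D dataset, scales its covariance matrix to unit trace (so it can be read as a density matrix ρ), and finds the dominant eigenvalue and eigenvector by power iteration. In QPCA these would instead be estimated with quantum phase estimation, assuming ρ can be prepared efficiently. The helper names `density_matrix` and `top_component` are hypothetical.

```python
import math

# Classical reference for what QPCA estimates (illustrative; helper names are hypothetical).


def density_matrix(data):
    """Covariance matrix of the centered data, scaled to unit trace so it can be read as rho."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in data]
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(d)] for i in range(d)]
    trace = sum(cov[i][i] for i in range(d))
    return [[value / trace for value in row] for row in cov]


def top_component(rho, iters=200):
    """Power iteration for the largest eigenvalue of rho and its eigenvector (first principal component)."""
    d = len(rho)
    v = [1.0] * d
    for _ in range(iters):
        w = [sum(rho[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v^T rho v gives the eigenvalue for the normalized vector v.
    eigenvalue = sum(v[i] * rho[i][j] * v[j] for i in range(d) for j in range(d))
    return eigenvalue, v


# Made-up 2-D data lying close to the line y = x.
data = [[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.9], [5.0, 5.0]]
rho = density_matrix(data)
eigenvalue, component = top_component(rho)
print(f"top eigenvalue {eigenvalue:.4f}, component {[round(c, 3) for c in component]}")
```

Almost all of the variance in this toy data lies along the diagonal, so the top eigenvalue is close to 1 and the component is close to (1, 1)/√2, up to sign.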

Quantum Optimization:

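Optimization is at the heart of training ML models. Quantum optimization algorithms such as the Quantum Approximate Optimization Algorithm (QAOA) target combinatorial problems: a parameterized circuit alternates a cost layer, which encodes the objective, with a mixing layer, and a classical optimizer tunes the circuit's angles. QAOA may find good solutions in large search spaces, but whether it outperforms the best classical solvers on practical instances is still an open question.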
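To show the alternating structure of QAOA, here is a depth-1 (p = 1) instance for MaxCut on a triangle graph, simulated exactly in plain Python. The graph, the coarse grid search over the angles γ and β, and the helper names are illustrative assumptions; real experiments would use an SDK and a proper classical optimizer. The cost layer multiplies each bitstring's amplitude by a phase proportional to its cut value, and the mixer applies exp(-iβX) to every qubit.

```python
import cmath
import math

# Illustrative depth-1 QAOA for MaxCut, simulated exactly (graph and helper names are assumptions).
EDGES = [(0, 1), (1, 2), (0, 2)]  # triangle: the best cut separates one node, cutting 2 edges
N_QUBITS = 3


def cut_value(z):
    """Edges cut by bitstring z, where bit i of z is the side assigned to node i."""
    return sum(((z >> i) & 1) != ((z >> j) & 1) for i, j in EDGES)


def qaoa_expectation(gamma, beta):
    """Expected cut after |+>^n -> exp(-i*gamma*C) -> exp(-i*beta*X) on every qubit."""
    dim = 2 ** N_QUBITS
    state = [1 / math.sqrt(dim)] * dim  # uniform superposition over all bitstrings
    # Cost layer: a phase proportional to each bitstring's cut value (C is diagonal).
    state = [amp * cmath.exp(-1j * gamma * cut_value(z)) for z, amp in enumerate(state)]
    # Mixer layer: exp(-i*beta*X) = cos(beta)*I - i*sin(beta)*X, applied qubit by qubit.
    stay, flip = math.cos(beta), -1j * math.sin(beta)
    for q in range(N_QUBITS):
        new = [0j] * dim
        for z, amp in enumerate(state):
            new[z] += stay * amp
            new[z ^ (1 << q)] += flip * amp
        state = new
    return sum(abs(amp) ** 2 * cut_value(z) for z, amp in enumerate(state))


# The classical outer loop: a coarse grid search stands in for a real optimizer.
steps = 40
best_value, best_gamma, best_beta = max(
    (qaoa_expectation(math.pi * a / steps, math.pi * b / steps), math.pi * a / steps, math.pi * b / steps)
    for a in range(steps)
    for b in range(steps)
)
print(f"expected cut {best_value:.3f} at gamma={best_gamma:.3f}, beta={best_beta:.3f}")
```

A uniformly random assignment cuts 1.5 edges of the triangle on average, so an expected cut above 1.5 shows the circuit concentrating probability on good bitstrings. Larger p adds more alternating layers and more angles to tune.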

Quantum Neural Networks (QNN):

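QNNs are quantum analogs of classical neural networks. They are usually built from parameterized quantum circuits whose gate angles play the role of trainable weights, and they are trained in a hybrid loop in which a classical optimizer updates the angles based on measured outputs. Proponents hope for faster training or inference on complex, high-dimensional data, but such speedups have not been demonstrated in general.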
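Two ingredients make a parameterized circuit trainable: a forward pass that returns a measured expectation value, and a gradient. The sketch below uses a hypothetical two-qubit circuit (RY on each qubit, a CNOT, then ⟨Z⟩ on the second qubit) and the parameter-shift rule, which gives exact gradients for rotation gates such as RY by evaluating the same circuit at angles shifted by ±π/2. A central finite-difference check confirms the result. The circuit layout and helper names are illustrative assumptions.

```python
import math

# Hypothetical two-qubit QNN layer (an illustrative choice, not a standard architecture):
# RY(p0) on qubit 0, RY(p1) on qubit 1, CNOT(0 -> 1), then measure <Z> on qubit 1.


def ry_on_zero(theta):
    """Amplitudes of RY(theta)|0> (real-valued)."""
    return [math.cos(theta / 2), math.sin(theta / 2)]


def circuit_expectation(params):
    """Forward pass: simulate the circuit and return <Z> on qubit 1."""
    a = ry_on_zero(params[0])
    b = ry_on_zero(params[1])
    state = [a[i] * b[j] for i in (0, 1) for j in (0, 1)]  # index = 2*q0 + q1
    state[2], state[3] = state[3], state[2]  # CNOT swaps |10> and |11>
    # <Z_1> = P(qubit 1 = 0) - P(qubit 1 = 1); amplitudes are real for this gate set.
    return (state[0] ** 2 + state[2] ** 2) - (state[1] ** 2 + state[3] ** 2)


def parameter_shift_grad(f, params):
    """Parameter-shift rule for RY-type gates: df/dp_k = (f(p_k + pi/2) - f(p_k - pi/2)) / 2."""
    grad = []
    for k in range(len(params)):
        plus = list(params)
        plus[k] += math.pi / 2
        minus = list(params)
        minus[k] -= math.pi / 2
        grad.append((f(plus) - f(minus)) / 2)
    return grad


params = [0.3, 1.1]
grad = parameter_shift_grad(circuit_expectation, params)

# Sanity check against central finite differences.
eps = 1e-6
finite_diff = []
for k in range(len(params)):
    up, down = list(params), list(params)
    up[k] += eps
    down[k] -= eps
    finite_diff.append((circuit_expectation(up) - circuit_expectation(down)) / (2 * eps))

print("output:", round(circuit_expectation(params), 4))
print("parameter-shift:", [round(g, 6) for g in grad])
print("finite-diff:    ", [round(g, 6) for g in finite_diff])
```

On hardware each expectation value is estimated from repeated measurements, which is why the parameter-shift rule is attractive: it needs two extra circuit evaluations per parameter at large shifts, rather than a tiny finite-difference step that shot noise would swamp.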

Quantum Transformers:

Inspired by classical transformers, quantum transformers are being proposed for sequential data and natural language processing tasks. Some proposals suggest the potential for large, even exponential, speedups, but this work is at an early and largely theoretical stage.

4. Practical Applications and Code Demonstrations

Hands-on Learning:

Modern tutorials provide code examples for implementing quantum ML models using frameworks like Qiskit, PennyLane, and Cirq. Example projects include:

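- Quantum Kernel SVM for Image Classification: Classify images from datasets like MNIST using quantum-enhanced SVMs, usually after downscaling the images or reducing them to a few features so they fit on a small number of qubits.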

- Quantum Classifier for Structured Data: Apply quantum classifiers to datasets such as the Wine dataset.

- Quantum Generative Models: Explore quantum GANs for generating synthetic data.

Step-by-Step Tutorial Example:

- Install Quantum Computing Libraries: Choose a framework (e.g., Qiskit, PennyLane) and install it.

- Prepare Data: Encode classical data into quantum states using quantum feature maps.

- Build Quantum Circuit: Design the quantum circuit for your chosen ML algorithm (e.g., QSVM or QNN).

- Train the Model: Use hybrid quantum-classical optimization, in which a classical optimizer updates the circuit parameters based on measured outputs.

- Evaluate Performance: Measure accuracy, loss, and other metrics, and compare them with classical baselines.
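The workflow above can be traced end to end in a deliberately tiny example. The sketch below skips installation (it is plain Python with no SDK) and walks through the remaining steps on a made-up one-dimensional dataset. It angle-encodes each input with RY(x), applies one trainable RY(θ) gate, reads out ⟨Z⟩ as the prediction, trains θ by gradient descent with parameter-shift gradients, and compares training accuracy with a simple classical threshold baseline. The dataset, learning rate, and helper names are assumptions for illustration only.

```python
import math

# Tiny end-to-end sketch (plain Python, no SDK). Dataset, learning rate, and names are illustrative.

# Prepare Data: a made-up 1-D dataset; label -1 near x = 0.3, label +1 near x = 2.8.
train_x = [0.1, 0.3, 0.5, 2.6, 2.8, 3.0]
train_y = [-1, -1, -1, 1, 1, 1]


def model(x, theta):
    """Build Quantum Circuit: encode x with RY(x), apply trainable RY(theta), return <Z>.
    Since RY(theta) RY(x) |0> = RY(x + theta) |0>, the amplitudes are cos and sin of (x + theta)/2."""
    half = (x + theta) / 2
    amp0, amp1 = math.cos(half), math.sin(half)
    return amp0 ** 2 - amp1 ** 2  # <Z> = P(0) - P(1)


def loss(theta):
    """Mean squared error between <Z> and the +/-1 labels."""
    return sum((model(x, theta) - y) ** 2 for x, y in zip(train_x, train_y)) / len(train_x)


def gradient(theta):
    """Train the Model: parameter-shift rule for d<Z>/dtheta, chain rule for the loss."""
    total = 0.0
    for x, y in zip(train_x, train_y):
        d_model = (model(x, theta + math.pi / 2) - model(x, theta - math.pi / 2)) / 2
        total += 2 * (model(x, theta) - y) * d_model
    return total / len(train_x)


def accuracy(predict):
    """Fraction of training points whose predicted label matches the true label."""
    return sum(predict(x) == y for x, y in zip(train_x, train_y)) / len(train_x)


theta, learning_rate = 1.0, 0.2
initial_loss = loss(theta)
for _ in range(200):
    theta -= learning_rate * gradient(theta)  # classical optimizer step on the circuit parameter

# Evaluate Performance: quantum model vs. a classical threshold at the midpoint of the class means.
quantum_acc = accuracy(lambda x: 1 if model(x, theta) > 0 else -1)
mean_neg = sum(x for x, y in zip(train_x, train_y) if y == -1) / 3
mean_pos = sum(x for x, y in zip(train_x, train_y) if y == 1) / 3
threshold = (mean_neg + mean_pos) / 2
classical_acc = accuracy(lambda x: 1 if x > threshold else -1)
print(f"loss {initial_loss:.3f} -> {loss(theta):.3f}; accuracy quantum {quantum_acc:.2f}, classical {classical_acc:.2f}")
```

Because RY(θ)RY(x) = RY(x + θ), this one-qubit model is only a shifted cosine, and the classical baseline matches it; the point is the shape of the loop, not a quantum advantage. With Qiskit or PennyLane, the `model` function would be replaced by a circuit executed on a simulator or device.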

5. Theoretical Insights and Learning Theory

Expressivity and Trainability:

Quantum models can represent some functions that are believed to be hard to compute classically, potentially leading to better generalization on suitable problems. However, trainability remains a challenge: quantum circuits can suffer from barren plateaus, regions where the loss landscape is so flat that gradients become vanishingly small as the number of qubits grows.
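Barren plateaus are usually stated in terms of gradient statistics. For a cost C(θ) = ⟨ψ(θ)|H|ψ(θ)⟩ produced by a parameterized circuit on n qubits that is sufficiently random (for example, one that approximates a unitary 2-design), the standard result can be summarized as follows, where ∂_k C is the derivative with respect to the k-th parameter and the constant b depends on the circuit and observable:

```latex
C(\boldsymbol{\theta}) = \langle \psi(\boldsymbol{\theta}) \rvert H \lvert \psi(\boldsymbol{\theta}) \rangle,
\qquad
\mathbb{E}_{\boldsymbol{\theta}}\!\left[\partial_k C\right] = 0,
\qquad
\operatorname{Var}_{\boldsymbol{\theta}}\!\left[\partial_k C\right] \in O\!\left(b^{-n}\right)
\ \text{for some } b > 1.
```

Because the gradient concentrates around zero, estimating it to a fixed relative precision requires a number of measurement shots that grows exponentially with n.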

Computational Complexity:

Quantum algorithms can offer exponential or quadratic speedups for certain tasks, but not all ML problems are equally suited to quantum acceleration. Understanding the theoretical limits is crucial for effective QML research and application.
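A concrete example of a quadratic speedup is unstructured search: finding one marked item among N takes about N/2 queries on average classically, while Grover's algorithm needs about (π/4)√N. The short calculation below (plain Python; the function names are illustrative) shows how the gap grows with N. It also shows that a quadratic speedup is far more modest than an exponential one, which is one reason its practical benefit can be eroded by hardware overheads.

```python
import math

# Illustrative query-count comparison for unstructured search with one marked item.


def classical_expected_queries(n_items):
    """Checking items in random order finds the marked one after about N/2 queries on average."""
    return n_items / 2


def grover_queries(n_items):
    """Grover's algorithm uses about (pi/4) * sqrt(N) oracle queries."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


for n in (10 ** 4, 10 ** 6, 10 ** 8):
    print(f"N = {n:>11,}: classical ~{classical_expected_queries(n):>12,.0f}, Grover ~{grover_queries(n):>6,}")
```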

6. Challenges and Future Directions

Hardware Limitations:

Current quantum computers are noisy and have limited qubit counts: every gate has a small chance of error, so errors accumulate as circuits grow deeper. Research is ongoing to develop fault-tolerant, scalable quantum hardware.
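A back-of-the-envelope calculation shows why noise limits circuit size. Assuming, as a deliberately crude model, that every gate fails independently with probability p, a circuit of G gates runs without any error with probability (1 - p)^G, which is close to exp(-pG) for small p. The sketch below evaluates this for a few illustrative values of p and G; they are not measurements of any specific device.

```python
import math

# Crude noise model (an assumption): every gate fails independently with probability p.


def success_probability(error_rate, n_gates):
    """Probability that a circuit of n_gates runs with no error at all: (1 - p) ** G."""
    return (1 - error_rate) ** n_gates


for p in (1e-2, 1e-3):
    for gates in (100, 1000):
        exact = success_probability(p, gates)
        approx = math.exp(-p * gates)  # small-p approximation
        print(f"p = {p:g}, gates = {gates:>4}: P(no error) = {exact:.3f} (exp approx {approx:.3f})")
```

With p = 0.001, a 1,000-gate circuit already has only about a 37% chance of running error-free, which is why error correction or error mitigation is needed for deeper circuits.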

Algorithm Development:

Many quantum ML algorithms are still in the experimental stage. Ongoing work focuses on improving robustness, scalability, and integration with classical systems.

Frontier Topics:

- Quantum Transformers and Large Language Models: Early research is exploring quantum versions of advanced AI architectures.

- Hybrid Quantum-Classical Systems: Combining the strengths of both paradigms for practical, near-term applications.

- Open Problems: Issues like error mitigation, efficient data encoding, and real-world deployment remain active research areas.
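Error mitigation is easiest to picture through zero-noise extrapolation, one widely used technique: the same circuit is run at deliberately amplified noise levels (for example, by inserting gate sequences that cancel logically but add noise) and the results are extrapolated back to zero noise. The sketch below assumes a toy exponential-decay noise model and uses Richardson (polynomial) extrapolation; the noise model, decay rate, and function names are illustrative assumptions, not a description of real hardware.

```python
import math

# Illustrative zero-noise extrapolation. The exponential-decay noise model and its rate
# are assumptions for demonstration, not a model of any real device.
IDEAL_VALUE = 1.0


def noisy_expectation(scale, decay=0.1):
    """Toy model: the measured expectation value shrinks as noise is amplified by `scale`."""
    return IDEAL_VALUE * math.exp(-decay * scale)


def richardson_zero_noise(scales, values):
    """Fit the unique polynomial through the (scale, value) points and evaluate it at scale 0
    (Lagrange form of Richardson extrapolation)."""
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= (0 - s_j) / (s_i - s_j)
        estimate += weight * v_i
    return estimate


scales = [1, 2, 3]  # 1 = native noise level; 2 and 3 = deliberately amplified noise
measured = [noisy_expectation(s) for s in scales]
mitigated = richardson_zero_noise(scales, measured)
print(f"raw {measured[0]:.4f}, mitigated {mitigated:.4f}, ideal {IDEAL_VALUE}")
```

The extrapolated value lands much closer to the ideal result than the raw measurement. On real devices the benefit depends on how well the polynomial matches the true noise behavior, and extrapolation also amplifies shot noise.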

Conclusion

Quantum computing could profoundly impact the future of AI and machine learning. By integrating quantum algorithms with ML models, researchers and practitioners may unlock new levels of computational power, efficiency, and insight. While challenges remain, the field is advancing rapidly, and hands-on tutorials and resources are making it increasingly accessible. Whether you are a machine learning practitioner, AI researcher, or student, now is the perfect time to explore the exciting frontier of Quantum Machine Learning.